FanCheng iCub robots gradually learn facial expressions through algorithms

Published: 2020-04-22

As robots enter more environments and become part of our daily lives, they interact with humans regularly and need to communicate with users as effectively as possible. Over the past decade or so, researchers around the world have therefore been developing machine-learning-based models and other technologies to improve human-robot interaction.

One way to improve how robots communicate with human users is to train them to express basic emotions such as sadness, happiness, fear, and anger. The ability to express emotions could eventually let robots convey information more effectively and in a manner suited to the situation at hand.

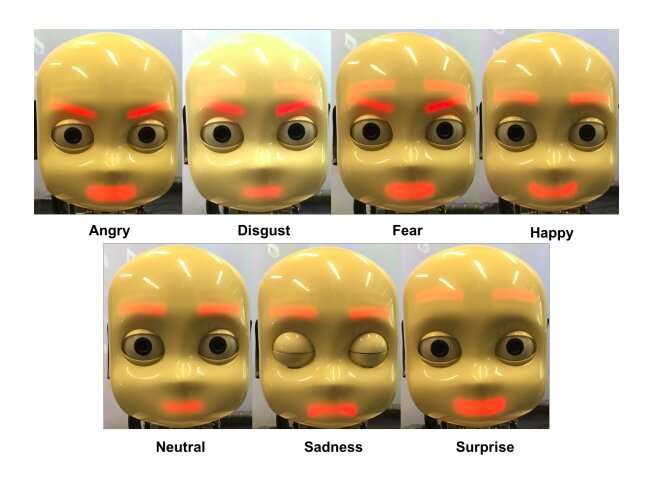

Researchers at the University of Hamburg in Germany recently developed a machine-learning-based method that teaches robots to express what have been defined as the seven universal emotions: anger, disgust, fear, happiness, sadness, surprise, and neutrality. In a paper published on arXiv, they applied and tested the technique on a humanoid robot called iCub.

The researchers' new method draws inspiration from the previously developed TAMER (Training an Agent Manually via Evaluative Reinforcement) framework. TAMER is an algorithm that can be used to train a multilayer perceptron (MLP), a type of artificial neural network (ANN).
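To make the TAMER idea concrete: instead of learning from a reward built into the environment, a TAMER-style agent learns a model of the human trainer's feedback and picks the action that model predicts will be rewarded most. The sketch below is a minimal, tabular illustration of that principle, not the Hamburg team's implementation (which the article describes as training an MLP). The class `TamerAgent`, the action names, and the simulated trainer are all hypothetical.

```python
class TamerAgent:
    """Minimal TAMER-style agent (hypothetical, tabular for illustration).

    It keeps an estimate H(state, action) of the reward a human trainer
    would give, and acts greedily with respect to that estimate.
    """

    def __init__(self, actions, learning_rate=0.5):
        self.actions = list(actions)
        self.learning_rate = learning_rate
        self.h = {}  # (state, action) -> predicted human reward

    def predict(self, state, action):
        # Unseen (state, action) pairs start with a neutral prediction of 0.
        return self.h.get((state, action), 0.0)

    def act(self, state):
        # Greedy choice: the action the human is predicted to reward most.
        # Ties go to the earliest action in self.actions.
        return max(self.actions, key=lambda a: self.predict(state, a))

    def update(self, state, action, human_reward):
        # Move the prediction a fraction of the way toward the observed reward.
        old = self.predict(state, action)
        self.h[(state, action)] = old + self.learning_rate * (human_reward - old)


if __name__ == "__main__":
    # Hypothetical trainer: rewards a smile for "happiness", penalizes anything else.
    agent = TamerAgent(["frown", "smile", "raise_brows"])
    for _ in range(10):
        action = agent.act("happiness")
        agent.update("happiness", action, 1.0 if action == "smile" else -1.0)
    print(agent.act("happiness"))  # prints "smile"
```

A table is used only to keep the example short; replacing it with a function approximator such as an MLP is what lets this kind of learner generalize across states.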

In the new study, the researchers adapted the TAMER framework to train a machine-learning model that conveys different human emotions by generating the corresponding facial expressions on the iCub robot. iCub is an open-source robot platform developed by a research team at the Italian Institute of Technology (IIT) as part of the EU project RobotCub, and it is often used in robotics research to evaluate machine learning algorithms.

"The robot combines a convolutional neural network (CNN) and a self-organizing map (SOM) to recognize emotions, and then learns to express emotions using an MLP," the researchers wrote in the paper. "Our goal is to teach a robot to respond appropriately to the emotions it perceives in users and to learn how to express different emotions."

The researchers used the CNN to analyze images of human users' facial expressions captured by the iCub robot. The facial feature representations produced by this analysis are then fed into the SOM, which reveals the specific patterns in how each user expresses particular emotions.
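A self-organizing map places prototype vectors on a 2-D grid and, for each input, pulls the best-matching prototype and its grid neighbors toward that input, so similar inputs end up on nearby units. The following is a generic Kohonen SOM sketch in plain Python, assuming toy 2-D vectors in place of the CNN's facial feature representations. The function names, grid size, and decay schedules are illustrative choices, not the researchers' settings.

```python
import math
import random


def best_matching_unit(weights, x):
    """Return (row, col) of the map unit whose weight vector is closest to x."""
    best, best_dist = (0, 0), float("inf")
    for i, row in enumerate(weights):
        for j, w in enumerate(row):
            dist = sum((wk - xk) ** 2 for wk, xk in zip(w, x))
            if dist < best_dist:
                best, best_dist = (i, j), dist
    return best


def train_som(data, rows, cols, epochs=100, lr0=0.5, sigma0=None, seed=0):
    """Train a rows x cols Kohonen map on a list of equal-length feature vectors."""
    rng = random.Random(seed)
    dim = len(data[0])
    if sigma0 is None:
        sigma0 = max(rows, cols) / 2.0
    # Random initial prototype (weight) vector for every map unit.
    weights = [[[rng.random() for _ in range(dim)] for _ in range(cols)]
               for _ in range(rows)]
    for epoch in range(epochs):
        frac = epoch / epochs
        lr = max(lr0 * (1.0 - frac), 0.01)       # learning rate decays linearly
        sigma = max(sigma0 * (1.0 - frac), 0.5)  # neighborhood radius shrinks
        for x in rng.sample(data, len(data)):    # visit samples in random order
            bi, bj = best_matching_unit(weights, x)
            for i in range(rows):
                for j in range(cols):
                    # Gaussian neighborhood on the grid around the winning unit.
                    grid_d2 = (i - bi) ** 2 + (j - bj) ** 2
                    h = math.exp(-grid_d2 / (2.0 * sigma ** 2))
                    w = weights[i][j]
                    for k in range(dim):
                        w[k] += lr * h * (x[k] - w[k])
    return weights


if __name__ == "__main__":
    # Stand-in 2-D "features": two toy clusters instead of real CNN outputs.
    features = [[0.0, 0.0], [0.1, 0.0], [0.0, 0.1],
                [1.0, 1.0], [0.9, 1.0], [1.0, 0.9]]
    som = train_som(features, rows=3, cols=3)
    print(best_matching_unit(som, [0.05, 0.05]), best_matching_unit(som, [0.95, 0.95]))
```

After training, the two clusters map to different grid units; in the setting the article describes, that grouping would correspond to recurring patterns in how a user expresses an emotion.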

These patterns are then modeled and used to train the MLP to predict how to adjust iCub's facial features so that it best imitates the user's facial expression. Finally, human users reward the robot according to how accurately it expresses the emotion.

"Once iCub performs an action, the user rewards it by providing a target value," the researchers explained in the paper. "This is done by asking the user to imitate the robot and provide it with information about how much the performed action differs from the expected action."
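The feedback loop described above amounts to supervised updates from human-supplied targets: the MLP proposes facial parameters for an emotion, the user's feedback is turned into a target value, and the network takes a gradient step toward it. The sketch below assumes that conversion has already happened (the imitation-and-recognition step is not reproduced) and uses two hypothetical facial parameters. `ExpressionMLP`, `one_hot`, and the target values are illustrative, not from the paper.

```python
import math
import random

# The seven universal emotions listed in the article.
EMOTIONS = ["anger", "disgust", "fear", "happiness", "sadness", "surprise", "neutral"]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def one_hot(emotion):
    return [1.0 if e == emotion else 0.0 for e in EMOTIONS]


class ExpressionMLP:
    """Hypothetical one-hidden-layer MLP: one-hot emotion -> facial parameters in (0, 1)."""

    def __init__(self, n_in, n_hidden, n_out, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n_out)]
        self.b2 = [0.0] * n_out

    def forward(self, x):
        # tanh hidden layer, sigmoid output layer.
        h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        y = [sigmoid(sum(w * hj for w, hj in zip(row, h)) + b)
             for row, b in zip(self.w2, self.b2)]
        return h, y

    def update(self, x, target, lr=0.5):
        """One gradient step on E = 0.5 * sum((y - t)^2); returns E before the step."""
        h, y = self.forward(x)
        # dE/dz at each sigmoid output unit.
        delta_out = [(yk - tk) * yk * (1.0 - yk) for yk, tk in zip(y, target)]
        # Backpropagate to tanh hidden units (computed before w2 is modified).
        delta_hid = [(1.0 - hj * hj) * sum(delta_out[k] * self.w2[k][j]
                                           for k in range(len(delta_out)))
                     for j, hj in enumerate(h)]
        for k, dk in enumerate(delta_out):
            for j, hj in enumerate(h):
                self.w2[k][j] -= lr * dk * hj
            self.b2[k] -= lr * dk
        for j, dj in enumerate(delta_hid):
            for i, xi in enumerate(x):
                self.w1[j][i] -= lr * dj * xi
            self.b1[j] -= lr * dj
        return 0.5 * sum((yk - tk) ** 2 for yk, tk in zip(y, target))


if __name__ == "__main__":
    # Hypothetical targets assumed to come from the user's feedback:
    # [mouth_curvature, eyebrow_raise], both scaled to (0, 1).
    targets = {"happiness": [0.9, 0.6], "sadness": [0.1, 0.3]}
    net = ExpressionMLP(n_in=len(EMOTIONS), n_hidden=8, n_out=2)
    for step in range(2000):
        emotion = "happiness" if step % 2 == 0 else "sadness"
        net.update(one_hot(emotion), targets[emotion])
    for emotion in targets:
        print(emotion, [round(v, 2) for v in net.forward(one_hot(emotion))[1]])
```

Sigmoid outputs keep every parameter inside (0, 1), a convenient assumption for actuator commands that must stay within fixed limits.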

Over time, the framework designed by the researchers is meant to learn, from human users' rewards, to express each of the seven universal emotions. So far, the technique has been evaluated in a series of preliminary experiments on the iCub robot platform, with promising results.

"Although the results are encouraging and the time required for training has been greatly reduced, our method still requires more than 100 interactions per user to learn meaningful expressions," the researchers said. "We expect this number to decrease as training methods improve and more training data is collected."