Fan Cheng Computer vision technology helps robots grasp transparent objects better

Опубликован в:2020-07-16

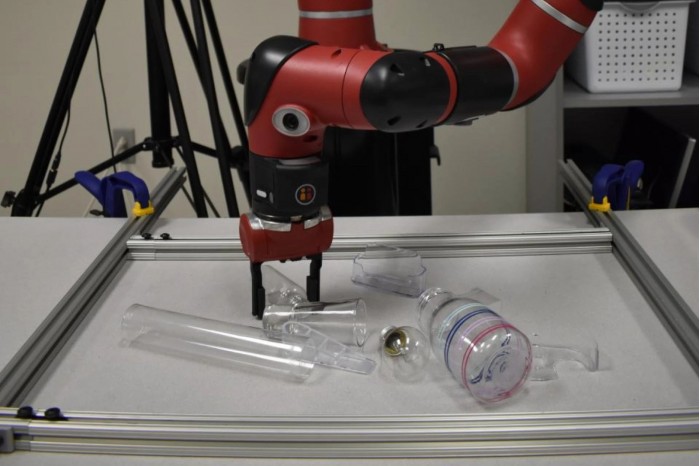

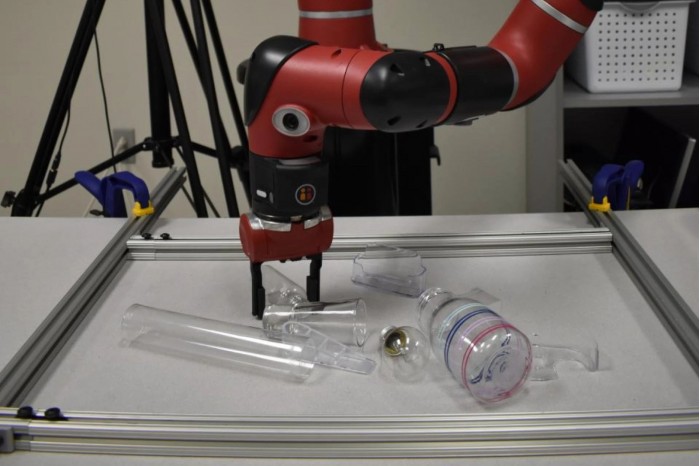

According to foreign media reports, in order to see and grasp objects, robots usually use depth sensing cameras such as Microsoft Kinect. Although the camera may be affected by transparent or luminous objects, scientists from Carnegie Mellon University have developed a solution. The function of the depth sensing camera is to shoot the infrared laser beam onto the object, and then measure the time required for the light to be reflected back from the contour of the object and then reflected to the sensor on the camera.

Although the system works well on relatively dim opaque objects, it has problems with transparent objects because most light passes through transparent objects, or shiny objects scatter reflected light. This is where the Carnegie Mellon system works. They use a color optical camera, which can also be used as a depth sensing camera.

The device uses an algorithm based on machine learning, which can be trained on the depth perception and color image of the same opaque object. By comparing the two types of images, the algorithm learns to infer the three-dimensional shape of objects in color images, even if these objects are transparent or luminous.

In addition, although only a small amount of depth data can be determined by direct laser scanning of such objects, the collected data can be used to improve the accuracy of the system.

In the current tests, robots using the new technology performed much better in grasping transparent and luminous objects than using only standard depth sensing cameras.

Professor David Held said: "although we sometimes miss it, it does well to a large extent, which is better than any previous system for grasping transparent or reflective objects."

Although the system works well on relatively dim opaque objects, it has problems with transparent objects because most light passes through transparent objects, or shiny objects scatter reflected light. This is where the Carnegie Mellon system works. They use a color optical camera, which can also be used as a depth sensing camera.

The device uses an algorithm based on machine learning, which can be trained on the depth perception and color image of the same opaque object. By comparing the two types of images, the algorithm learns to infer the three-dimensional shape of objects in color images, even if these objects are transparent or luminous.

In addition, although only a small amount of depth data can be determined by direct laser scanning of such objects, the collected data can be used to improve the accuracy of the system.

In the current tests, robots using the new technology performed much better in grasping transparent and luminous objects than using only standard depth sensing cameras.

Professor David Held said: "although we sometimes miss it, it does well to a large extent, which is better than any previous system for grasping transparent or reflective objects."